Reinventing data center site selection for the AI boom

The explosive growth of artificial intelligence has fundamentally changed site selection for data centers. The days of simply finding available land are over.

For developers and decision-makers, navigating this new environment means adapting. Selecting the right location is about more than just space; it involves managing risks like natural hazards, which are increasing with climate change, and working through complex regulations and permits.

However, for a project to last, finding the right land is just one of the three deeply interconnected pillars to master. Beyond that, developers must also ensure robust internet connectivity and sufficient power. In fact, securing grid interconnection has become the single biggest hurdle for data center projects. Getting just one of these wrong can lead to costly delays and budget overruns.

While point solutions exist for each of these factors, the deeper problem for data center developers lies in managing them separately. The AI race demands quick project development, rapid pipeline growth, and efficient capital deployment. Thus, the real issue isn’t finding one viable site, but scaling your entire project pipeline without wasting millions of dollars and valuable time on sites that won’t succeed.

To build the resilient digital infrastructure of tomorrow, you need a new approach — one that is integrated, scalable, and built for efficiency.

Watch our exclusive webinar, "Data center pipeline acceleration: scaling up site selection for the AI boom," to discover how to navigate this new landscape and build a strong project pipeline.

The three new pillars of data center site selection

1. Finding the right land

The sheer scale of modern data centers has transformed land acquisition. Where 25-40 acres were once sufficient, today's hyperscale facilities can require over 1,000 acres. But size is only the beginning of the challenge.

Developers must thoroughly assess the land's "developability," meaning how buildable it is. That includes checking for roadblocks that could complicate construction, such as steep slopes (topography), environmental hazards, and required setbacks.

Furthermore, to guarantee reliable "uptime," these sites must be resilient against natural disasters. This requires looking beyond outdated FEMA maps and analyzing both current and future risks with climate-adjusted flood and wildfire data.

Finally, navigating the web of local zoning regulations, environmental permits, and community acceptance for such massive projects is incredibly time-consuming. Attempting to manually vet hundreds of potential sites against these criteria is no longer feasible.

2. Ensuring robust connectivity

A data center inherently needs a fast, reliable connection to the internet. High-speed connectivity is the facility's circulatory system, requiring high-bandwidth, low-latency fiber optic cables to ensure constant data flow.

An ideal site is close to multiple major fiber routes from different providers. Think of it like having several highways leading to a city; this redundancy ensures constant data flow and creates competitive pricing among carriers. Another strategic factor to consider is proximity to "dark fiber" — unused fiber optic cables that are ready for future use and expansion.


Evaluating fiber routes and securing the optimal connection upfront allows for seamless expansion without costly and time-consuming construction down the road. While it's possible to extend fiber to a remote site, the process can increase costs and cause significant delays, making proximity to existing infrastructure a critical consideration from day one.

3. Finding power and workable electricity prices

In the current "power-first" era, accessing sufficient power has become the single biggest hurdle for new data centers. The scale of today’s hyperscale facilities means they can't just be "plugged in." A single gigawatt-scale campus can draw as much power as an entire city, which can create significant instability and add further constraints on the existing grid.

This is why a transmission strategy is essential to the site selection process.

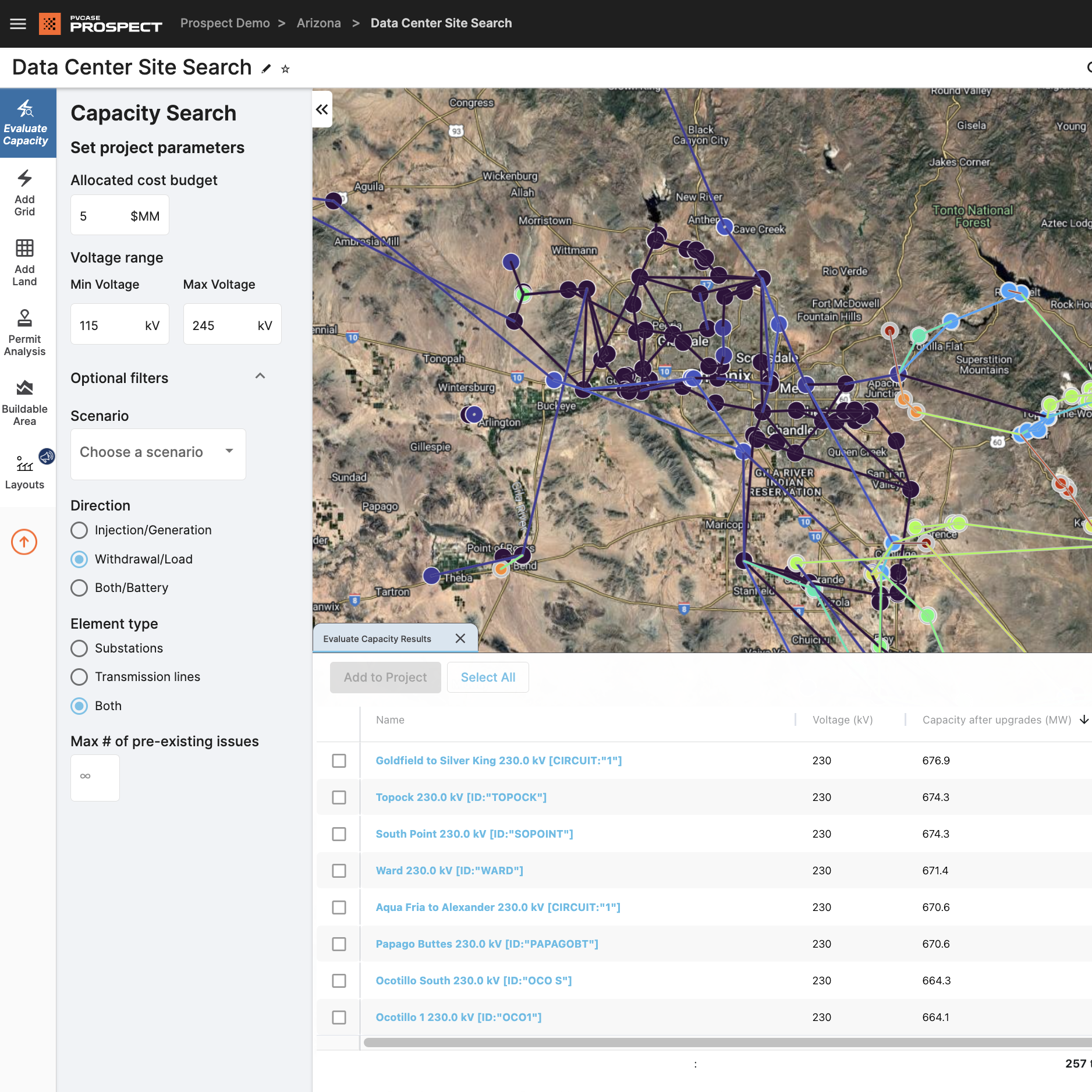

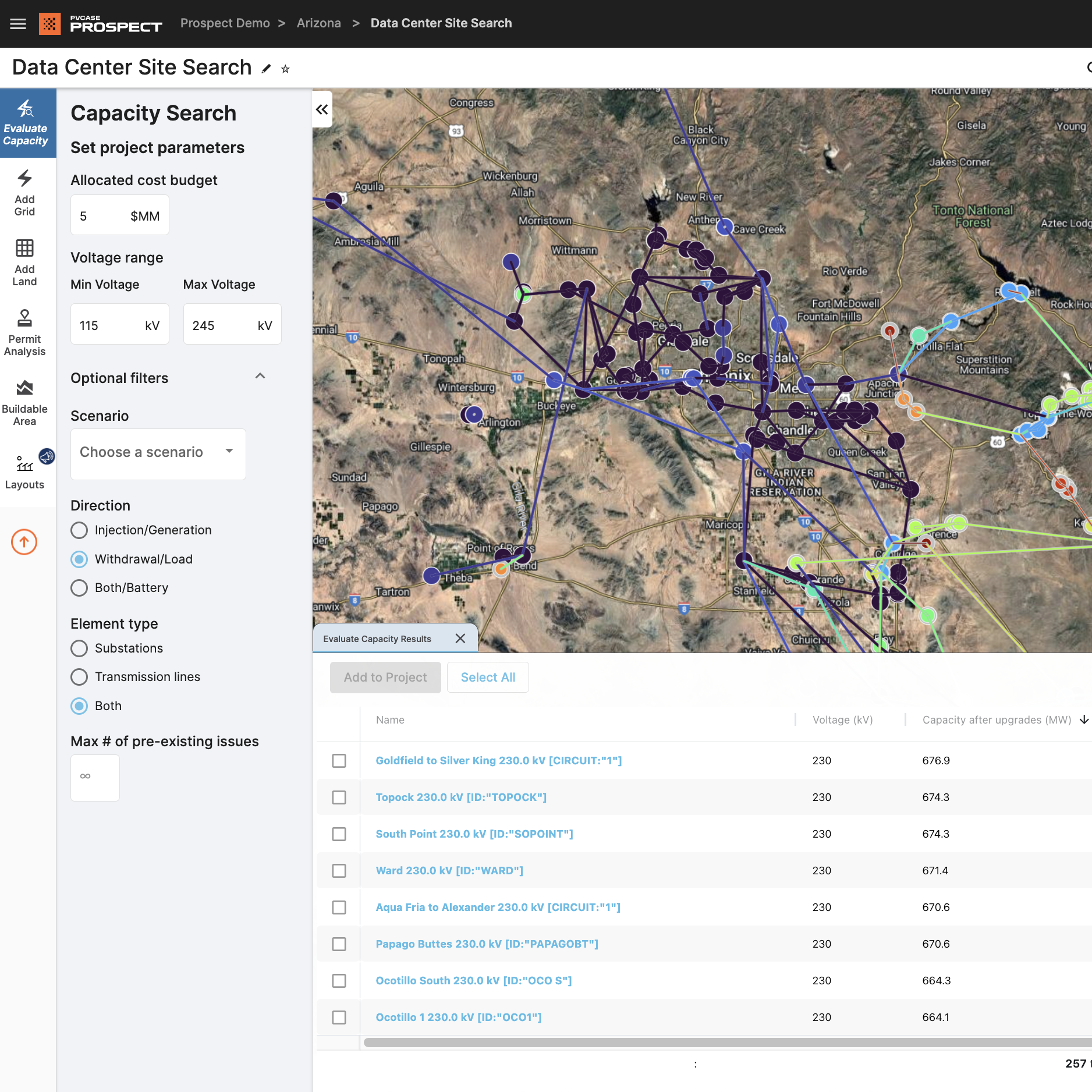

Pinpointing the less costly interconnection points with sufficient power

Large loads, like data centers, must apply to the overseeing utility or grid operator for interconnection to the grid. The grid operator then conducts a detailed study to determine two things: whether it can reliably supply sufficient power (offtake capacity) to the campus, both at the time of interconnection and in the future, and what infrastructure will need to be upgraded to handle “plugging in” such a large load (think: upgrading substations, transmission, and distribution equipment).

Utilities generally operate on a "you break it, you buy it" principle — the developer is responsible for any infrastructure upgrades needed. Essentially, getting any amount of power is technically possible, but it comes at a significant cost and with no guarantee of a workable timeline. Furthermore, critical equipment, such as high-voltage transformers, can have lead times of four to five years. Failing to analyze power availability and connection requirements early can completely derail a project.

This means project viability hinges not on absorbing these massive costs and delays, but on a developer's ability to strategically target areas with sufficient power at a workable interconnection cost.
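As a rough illustration of that targeting step, the sketch below filters candidate points of interconnection by capacity, upgrade cost per megawatt, and timeline, then ranks the survivors by cost. Everything in it is a hypothetical assumption made for the example: the `InterconnectionPoint` fields, the substation names, the figures, and the thresholds. Real values would come from utility studies and grid data.

```python
from dataclasses import dataclass


@dataclass
class InterconnectionPoint:
    name: str
    available_mw: float       # capacity the operator could serve (hypothetical)
    upgrade_cost_usd: float   # developer-funded upgrades (hypothetical)
    years_to_energize: float  # estimated timeline (hypothetical)


def shortlist(points, required_mw, max_cost_per_mw, max_years):
    """Keep points that meet load, budget, and timeline; cheapest per MW first."""
    viable = [
        p for p in points
        if p.available_mw >= required_mw
        and p.upgrade_cost_usd / required_mw <= max_cost_per_mw
        and p.years_to_energize <= max_years
    ]
    return sorted(viable, key=lambda p: p.upgrade_cost_usd / required_mw)


# Made-up candidates for a 300 MW campus.
points = [
    InterconnectionPoint("Substation A", 500, 120e6, 3.0),
    InterconnectionPoint("Substation B", 250, 20e6, 2.0),   # too little capacity
    InterconnectionPoint("Substation C", 400, 60e6, 6.0),   # too slow
    InterconnectionPoint("Substation D", 350, 45e6, 3.5),
]

ranked = shortlist(points, required_mw=300, max_cost_per_mw=500_000, max_years=4)
for p in ranked:
    print(p.name, f"${p.upgrade_cost_usd / 300:,.0f} per MW")
```

In this toy data set, the cheapest upgrade (Substation B) cannot serve the load at all, which is the kind of result that is easy to miss when capacity, cost, and timeline are reviewed separately.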

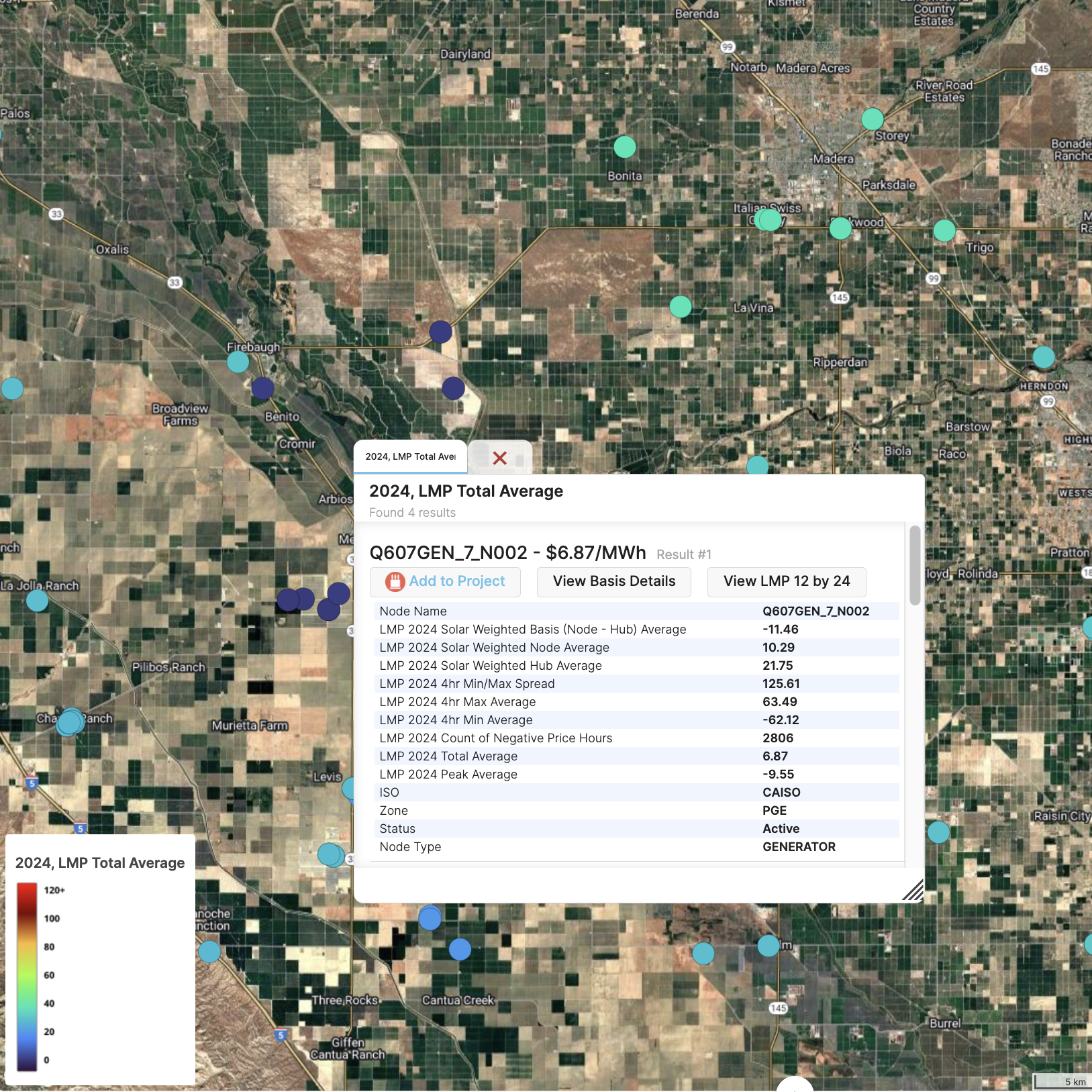

Understanding electricity prices

Since electricity can account for up to 60% of a data center's operational costs, understanding local wholesale electricity prices, evaluated through Locational Marginal Prices (LMPs), is also essential.

For instance, you might find a site with sufficient grid capacity and a reasonable upgrade cost, only to discover that the energy prices are prohibitively high, making the entire project unviable in the long term. Given the significant pricing variability even within a single region (and scaled over hundreds of megawatts), evaluating energy prices early is a crucial step to avoid spending valuable time and effort on a site that may never be cost-effective to run.
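To see why price differences matter at this scale, here is a back-of-the-envelope sketch. The 300 MW load, the 90% load factor, and the two average LMP values are illustrative assumptions, not figures from any real market, and the calculation ignores demand charges, transmission fees, and hourly price shape.

```python
HOURS_PER_YEAR = 8760


def annual_energy_cost(load_mw, avg_lmp_usd_per_mwh, load_factor=0.9):
    """Approximate yearly wholesale energy cost in USD.

    load_factor is the assumed share of peak load drawn on average.
    """
    energy_mwh = load_mw * load_factor * HOURS_PER_YEAR
    return energy_mwh * avg_lmp_usd_per_mwh


# Two hypothetical sites in the same region with different average LMPs.
site_a = annual_energy_cost(300, 35.0)
site_b = annual_energy_cost(300, 55.0)

print(f"Site A: ${site_a:,.0f} per year")
print(f"Site B: ${site_b:,.0f} per year")
print(f"Difference: ${site_b - site_a:,.0f} per year")
```

Under these assumptions, a $20/MWh gap in average price works out to roughly $47 million per year for the same campus.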

The truth is, your data center pipeline is only as fast as your ability to strategically identify and secure a grid connection at a practical cost, in an area with financially viable electricity prices.

The cost of sticking to the old playbook

The sheer scale of the AI boom has made it critical to move beyond traditional, piecemeal site selection practices. The old playbook, which relied on one-off consultant studies and disparate tools, is no longer feasible.

This process is slow and unworkable for several reasons:

Bespoke consulting studies for every potential site are prohibitively expensive and offer no guarantee of success.

Crucial grid and utility information is notoriously difficult to access, making it nearly impossible to conduct a comprehensive analysis early on.

Juggling different data sources and spreadsheets invites errors and inconsistencies, leading to delays.

In this fast-paced "gold rush," continuing with outdated methods is not just inefficient. A slow process means being left behind as competitors secure the prime sites first.

The modern solution: an integrated, scalable approach

The traditional, fragmented "scavenger hunt" for data center sites is over. The new reality demands an integrated, automated strategy that allows you to proactively analyze power, connectivity, and land developability, all at once, right from the start.

This holistic approach should enable you to work with both precision and speed by:

Pinpointing the areas that have financially viable energy prices and workable interconnection points.

Identifying nearby land with suitable acreage and fiber connectivity.

Moreover, this same strategy is also critical for screening inbound opportunities, allowing you to "fast-fail" — rapidly identify and reject non-viable sites — and focus your capital and resources only on projects with the highest probability of success.

Thus, the ideal solution for this new era must be able to:

Automatically assess land developability as well as resilience against current and future climate risks.

Integrate connectivity analysis from day one, showing fiber routes, internet exchange points (IXPs), and other key details to identify strategic networking options.

Proactively analyze interconnection potential and energy prices, revealing specific points of interconnection that offer sufficient power within budget, along with ongoing electricity prices that will ensure long-term financial viability, before you invest in expensive due diligence.
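The "fast-fail" screening described above can be pictured as a short chain of checks across land, connectivity, and power, where a site is rejected at the first check it fails. The sketch below is a minimal illustration only: the `Site` fields, the thresholds, and the sample parcels are all hypothetical, and a real screen would draw on far richer data.

```python
from typing import NamedTuple, Optional


class Site(NamedTuple):
    name: str
    buildable_acres: float
    high_flood_risk: bool           # climate-adjusted rating (hypothetical)
    fiber_carriers: int             # distinct providers nearby (hypothetical)
    interconnect_usd_per_mw: float  # estimated upgrade cost (hypothetical)
    avg_lmp_usd_per_mwh: float      # average wholesale price (hypothetical)


# Each entry pairs a rejection reason with a test the site must pass.
# Thresholds are illustrative assumptions, not industry standards.
CHECKS = [
    ("insufficient buildable acreage", lambda s: s.buildable_acres >= 200),
    ("high climate-adjusted flood risk", lambda s: not s.high_flood_risk),
    ("fewer than two fiber carriers", lambda s: s.fiber_carriers >= 2),
    ("interconnection cost over budget", lambda s: s.interconnect_usd_per_mw <= 500_000),
    ("energy price too high", lambda s: s.avg_lmp_usd_per_mwh <= 45.0),
]


def fast_fail(site: Site) -> Optional[str]:
    """Return the first failed check, or None if the site passes the screen."""
    for reason, passes in CHECKS:
        if not passes(site):
            return reason
    return None


candidates = [
    Site("Parcel 1", 650, False, 3, 150_000, 38.0),
    Site("Parcel 2", 120, False, 2, 90_000, 33.0),
    Site("Parcel 3", 900, False, 1, 200_000, 30.0),
    Site("Parcel 4", 400, False, 2, 300_000, 62.0),
]

for site in candidates:
    reason = fast_fail(site)
    status = "advance to due diligence" if reason is None else f"reject ({reason})"
    print(f"{site.name}: {status}")
```

Keeping the checks in one ordered list means the same screen can be applied to hundreds of inbound sites, and the thresholds can be adjusted in one place as budgets or market conditions change.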

Moving beyond old methods: your path forward

The time for reactive site selection is over. The AI boom requires a proactive, strategic approach to prevent decade-long delays and hundred-million-dollar budget overruns, all while scaling project pipelines fast.

Join us to learn how to master this new reality and confidently build the resilient data center infrastructure needed for the future.

We'll cover these strategies in detail and answer your most pressing questions live. Register today to save your seat and gain the actionable insights you need to accelerate your data center pipeline.