The "power-first" era: a new playbook for data center projects

How to prioritize power to accelerate your project pipeline

The AI boom has fundamentally changed how we build data centers. Land, connectivity, and power are the three core pillars of any data center project, but the primary focus has moved to power.

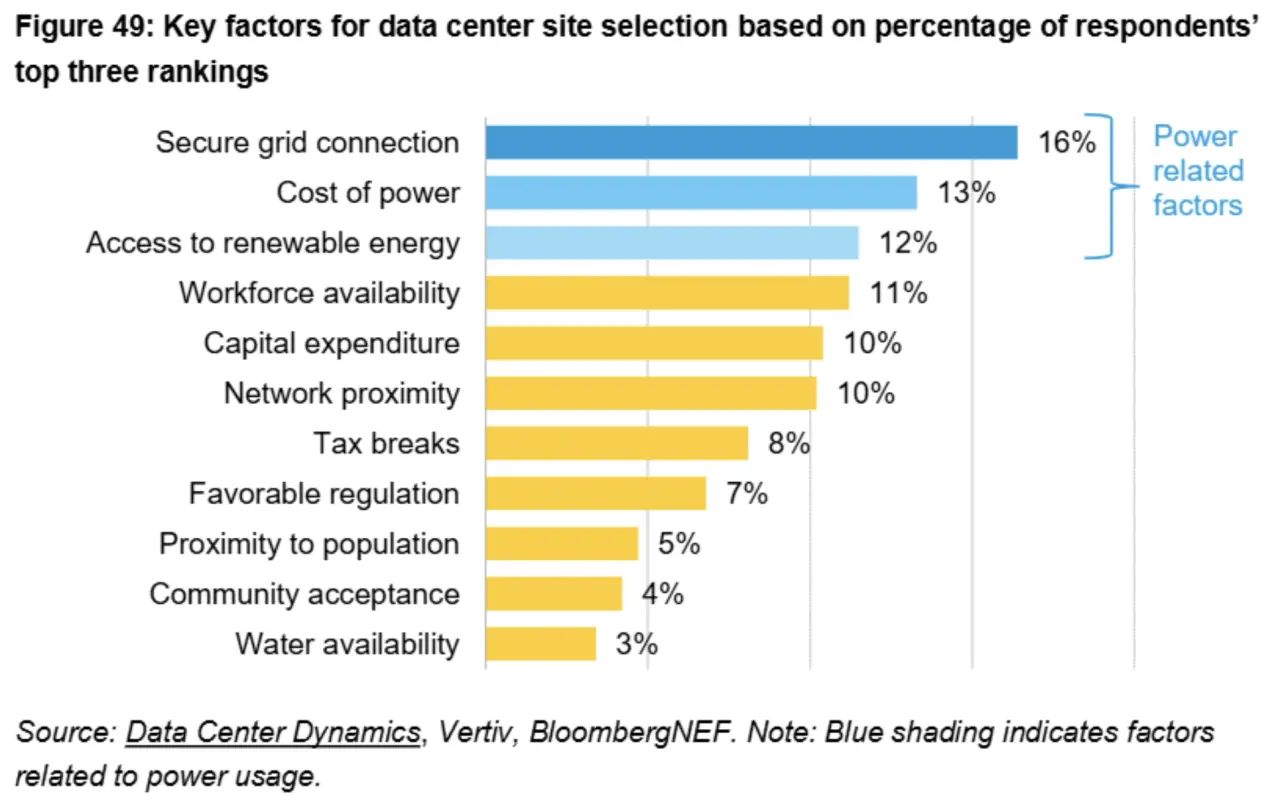

Securing sufficient power has become the single biggest bottleneck in the AI era. Power is now the top priority for data center operators: a recent Cushman & Wakefield survey found it is the top consideration for developers in the Americas.

Without accurately evaluating power, data center developers risk significant sunk costs from choosing land before securing power, as well as long interconnection timelines that can kill a once-viable project overnight.

For a project to succeed in this new environment, developers must adopt a proactive strategy that prioritizes power from day one. The AI race demands a new approach to site selection — one focused on finding viable power quickly to enable efficient capital deployment and achieve rapid pipeline growth.

To learn how to implement this strategy and gain a definitive edge in securing quality sites quickly, watch our exclusive on-demand webinar, "Data center pipeline acceleration: scaling site selection for the AI boom."

Why "power-first" isn't just a buzzword

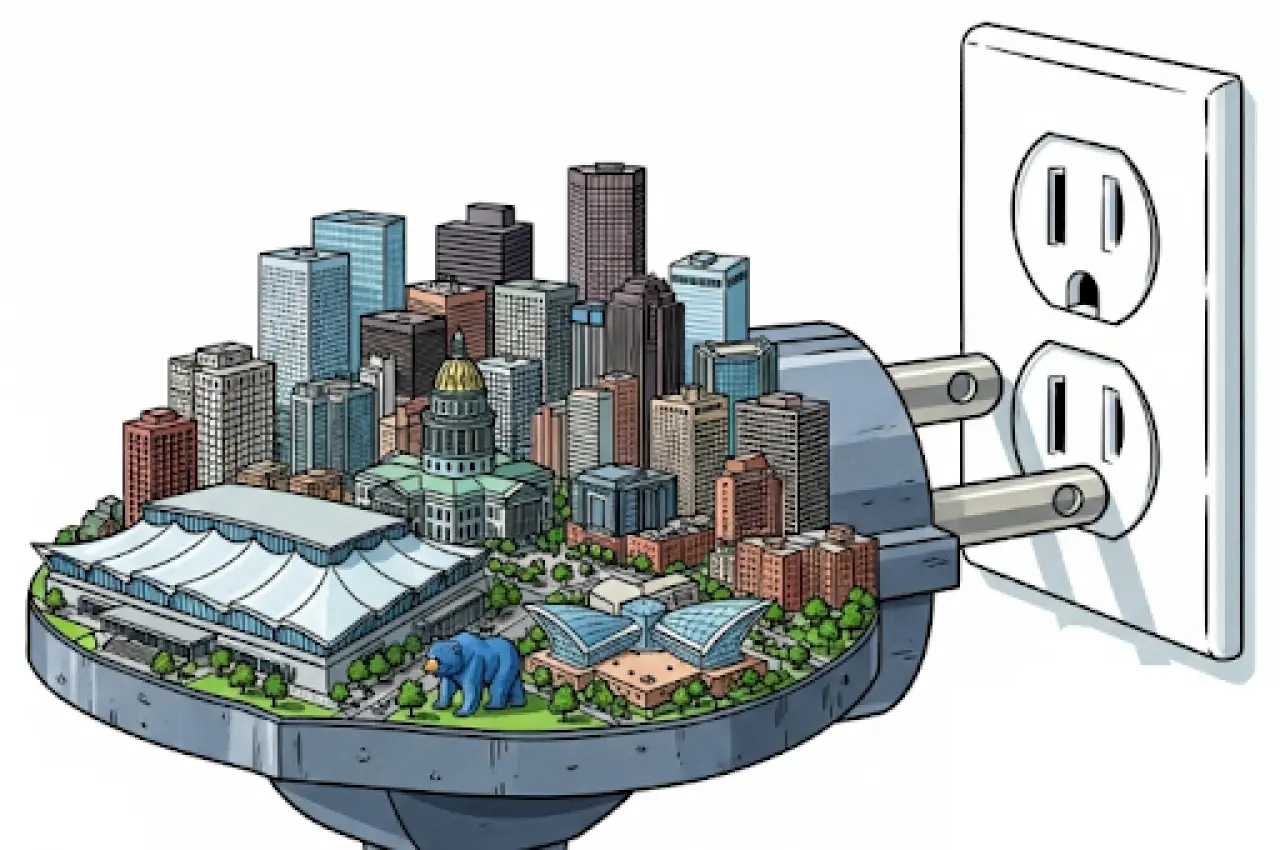

Because a new 1-gigawatt campus can draw as much power as an entire large city, developers clearly have to think beyond traditional real estate.

Siting a modern data center now has far more in common with developing an energy asset than with a typical commercial real estate project.

This is why a simple search for land is risky and outdated. For instance, one developer with a $20 million grid upgrade budget thought he had identified a promising site… only to discover during the utility interconnection study process that the required grid upgrades alone would cost $200 million.

Similarly, a developer who planned a campus to be built in just 2-3 years found that the power infrastructure would take a full decade to complete.

Beyond today's capacity: debunking the static interconnection myth

Large loads like modern data centers must go through the interconnection queue process with the overseeing grid operator, which operates on a "you break it, you buy it" principle: the developer is typically responsible for the massive and often unexpected costs of upgrading the grid to provide sufficient power to the campus.

There is a pervasive myth in the industry that, to greenlight interconnection, a utility only needs to check whether the grid has enough power (capacity) today to serve a facility. This is a fundamental misunderstanding of how grid operators evaluate large loads like data centers.

Utilities assess any project against a worst-case, forward-looking scenario. Once a data center is interconnected, utilities have to ensure they have enough generation planned for the future to continue powering the site.

Additionally, critical equipment like high-voltage transformers (4-5 year lead times) and high-voltage breakers (3-4 year lead times) must be planned for years in advance.

Plugging the equivalent of a large city into the grid will inevitably require some grid upgrades. But developers can evaluate which upgrades they are likely to trigger, the expected allocated cost, and other related constraints to target points of interconnection (POIs) with a clearer path (and budget) to power.
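To make this concrete, the sketch below screens candidate POIs against an upgrade budget and a target schedule, then ranks the survivors by cost. It is a minimal illustration, not a utility study: the `PointOfInterconnection` type, the `screen_pois` helper, and every cost and timeline figure are hypothetical, loosely modeled on the $20 million budget and decade-long build-out examples above. In practice, these estimates would come from interconnection studies and grid analysis.

```python
from dataclasses import dataclass


@dataclass
class PointOfInterconnection:
    """Hypothetical POI record with estimated (not studied) upgrade cost and timeline."""
    name: str
    est_upgrade_cost_musd: float  # estimated allocated grid-upgrade cost, $ millions
    est_years_to_power: float     # estimated years until the site can be energized


def screen_pois(pois, budget_musd, max_years):
    """Keep POIs that fit both the upgrade budget and the schedule, cheapest first."""
    viable = [
        p for p in pois
        if p.est_upgrade_cost_musd <= budget_musd and p.est_years_to_power <= max_years
    ]
    # Sort by cost, breaking ties on time to power
    return sorted(viable, key=lambda p: (p.est_upgrade_cost_musd, p.est_years_to_power))


# Illustrative candidates (all figures are made up)
candidates = [
    PointOfInterconnection("POI-A", 200.0, 4.0),   # upgrades blow the budget
    PointOfInterconnection("POI-B", 15.0, 3.0),
    PointOfInterconnection("POI-C", 12.0, 10.0),   # affordable, but a decade away
    PointOfInterconnection("POI-D", 8.0, 2.5),
]

for poi in screen_pois(candidates, budget_musd=20.0, max_years=3.0):
    print(poi.name, poi.est_upgrade_cost_musd, poi.est_years_to_power)
```

With a $20 million budget and a three-year target, only POI-D and POI-B survive; POI-A fails on cost and POI-C fails on schedule, mirroring the two failure modes described earlier.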

Failing to analyze power availability within the context of upgrade costs early can completely derail a project before it even starts.

Instead of just absorbing these massive costs and delays, the key is to strategically target areas with sufficient power at a practical cost. But it doesn't stop there.

The nuances of electricity pricing

Understanding the nuances of power pricing is a critical step beyond securing a grid connection. Electricity can account for up to 60% of a data center's ongoing operational costs, so evaluating it early is essential.

This is where the difference between the retail electricity price (think: what you see per kWh on your monthly utility bill), Locational Marginal Prices (LMPs), and Power Purchase Agreements (PPAs) becomes critical:

LMPs represent the wholesale price of electricity at specific points on the grid, and they can vary dramatically within a small region. LMPs are closer than the retail price to what a data center will actually pay for electricity.

Meanwhile, a PPA is a long-term contract signed with a utility or energy provider to secure a fixed or stable price for power.

Understanding these distinct prices is key to building a viable financial model and not wasting time on dead-end land.

Proactively analyzing historical LMP data can reveal powerful (pun intended) opportunities. Even within the same region, electricity at one location can cost four times as much as at another, and across hundreds of megawatts or a gigawatt of load, that gap can make or break a project. Additionally, some areas frequently see negative electricity prices when generation exceeds demand, which can be a financial windfall for a data center.
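As a rough illustration of the arithmetic, the sketch below estimates the annual energy-cost gap between two nodes with a 4x price difference, and measures how often a node's hourly LMP goes negative. The prices, the 300 MW load, and the helper names are hypothetical assumptions, and the estimate deliberately ignores demand charges, transmission fees, and hedging.

```python
HOURS_PER_YEAR = 8760


def annual_energy_cost_usd(load_mw, avg_price_usd_per_mwh, hours=HOURS_PER_YEAR, load_factor=1.0):
    """Approximate annual energy cost for a steady load at an average price (energy only)."""
    energy_mwh = load_mw * hours * load_factor
    return energy_mwh * avg_price_usd_per_mwh


def negative_price_share(hourly_lmps):
    """Fraction of hours in a historical LMP series with a negative price."""
    return sum(1 for price in hourly_lmps if price < 0) / len(hourly_lmps)


# Hypothetical average LMPs at two nodes in the same region ($/MWh): a 4x spread
cheap_node, pricey_node = 20.0, 80.0
load_mw = 300.0  # assumed campus load running around the clock

gap = annual_energy_cost_usd(load_mw, pricey_node) - annual_energy_cost_usd(load_mw, cheap_node)
print(f"Annual cost gap: ${gap / 1e6:.0f}M")

# A tiny made-up hourly sample; a real screen would use years of historical LMPs
sample_lmps = [25.0, -5.0, 30.0, -1.5, 40.0]
print(f"Share of negative-price hours: {negative_price_share(sample_lmps):.0%}")
```

Under these assumed prices, a 300 MW load running around the clock would pay roughly $158 million more per year at the pricier node.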

Similarly, analyzing basis risk (the difference between a regional hub's price and the price at the project's specific interconnection point) can help you identify areas with less price volatility, signaling an ideal location that's less prone to costly congestion and constraints.
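A minimal sketch of basis analysis, assuming hourly hub and node prices are already aligned hour by hour: it computes basis as hub price minus node price (following the definition above) and summarizes it with a mean and a population standard deviation. The price series and the `basis_stats` name are hypothetical; a real analysis would use years of historical data.

```python
from statistics import mean, pstdev


def basis_stats(hub_prices, node_prices):
    """Return (mean, population std dev) of basis, taken here as hub price minus node price."""
    if len(hub_prices) != len(node_prices):
        raise ValueError("price series must be aligned hour by hour")
    basis = [hub - node for hub, node in zip(hub_prices, node_prices)]
    return mean(basis), pstdev(basis)


# Hypothetical hourly prices ($/MWh)
hub = [30.0, 35.0, 50.0, 40.0]
node_a = [29.0, 34.0, 49.0, 39.0]  # tracks the hub closely
node_b = [25.0, 20.0, 90.0, 38.0]  # congestion-driven swings

for label, node in (("node_a", node_a), ("node_b", node_b)):
    avg, vol = basis_stats(hub, node)
    print(f"{label}: mean basis {avg:.2f} $/MWh, volatility {vol:.2f} $/MWh")
```

Here node_a's basis is a constant $1/MWh with zero volatility, while node_b's basis swings widely as congestion comes and goes, which is the kind of location this screen would flag.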

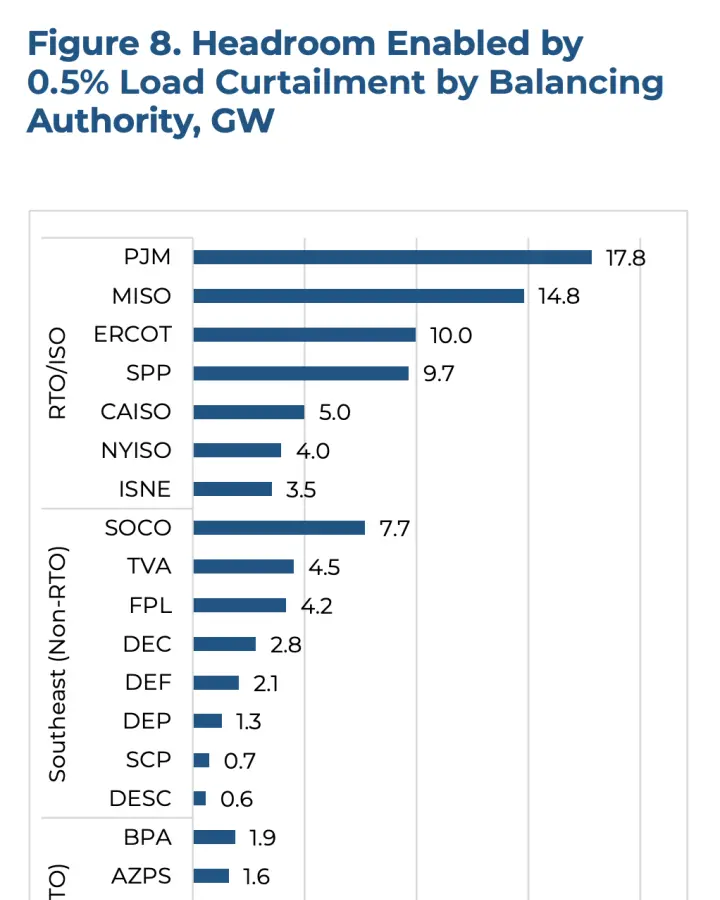

Overcoming grid constraints with flexible load

The grid is a dynamic system, and a utility's evaluation is based on a worst-case scenario that occurs in only a small fraction of the hours in a year. During the remaining 99% of the time, there's often significant available power to be tapped. This gap has created a clear opportunity to relieve grid constraints, leading to new, innovative solutions.

One of the most promising is the flexible load strategy, in which developers work with utilities to become partners, not just consumers. This approach allows a data center to temporarily reduce its power draw during periods of peak grid stress — perhaps for just a few dozen hours a year.

In exchange for this flexibility, developers can secure a much faster path to interconnection that would otherwise be unavailable. Developers provide this flexibility by reducing compute load or by switching to on-site battery storage or other on-site generation during those periods.
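To size that flexibility, one simple approach is to compare a site's full load against hourly grid headroom. The sketch below assumes a hypothetical hourly headroom series (in practice this would come from grid studies or utility data) and reports how many hours the load must be reduced or shifted to on-site resources, the total energy involved, and the peak shortfall in MW. The `flexibility_needs` function and all figures are illustrative assumptions.

```python
def flexibility_needs(headroom_mw, load_mw):
    """Compare a constant load to hourly grid headroom.

    Returns (hours needing flexibility, total shortfall energy in MWh, peak shortfall in MW).
    """
    # Shortfall is how far the full load exceeds available grid headroom in each hour
    shortfalls = [max(0.0, load_mw - headroom) for headroom in headroom_mw]
    hours_flexed = sum(1 for s in shortfalls if s > 0)
    energy_mwh = sum(shortfalls)  # hourly data, so MW x 1 h = MWh
    peak_mw = max(shortfalls)
    return hours_flexed, energy_mwh, peak_mw


# Made-up year: 500 MW of headroom most hours, dropping to 400 MW in 40 peak-stress hours
headroom = [500.0] * 8720 + [400.0] * 40
hours, energy, peak = flexibility_needs(headroom, load_mw=450.0)
print(f"{hours} hours ({hours / len(headroom):.2%} of the year), {energy:.0f} MWh, peak {peak:.0f} MW")
```

In this made-up example, a 450 MW campus would need to cover a 50 MW shortfall for 40 of 8,760 hours (under 0.5% of the year), or 2,000 MWh in total. That is the scale of need that load reduction, on-site batteries, or on-site generation would have to meet.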

This shift from demanding firm power at all times to a flexible partnership is a game-changer. It not only speeds up timelines but also makes projects viable in constrained areas where they previously couldn't be built.

For a real-world example, Google recently announced a groundbreaking data center demand response program with the Tennessee Valley Authority (TVA) and Indiana Michigan Power (IMP). The program builds on the growing openness to flexibility for certain AI workloads, which can be shifted to times when the grid is less strained. Google's partnership with TVA and IMP provides a clear path forward for other developers.

A deeper dive on land and connectivity

While power is the biggest blocker, the complexities of land and connectivity have also evolved and need to be considered in an integrated approach when evaluating power.

Beyond basic acreage, developers have to consider a site's developability, including topography, environmental constraints, easements, setbacks, and natural hazards. They may also need to evaluate proximity to key infrastructure like natural gas pipelines and water sources for backup power and cooling, which are vital for a resilient site.

The same granular detail applies to connectivity. A comprehensive strategy requires understanding existing fiber routes to evaluate redundancy and proximity. It also considers proximity to Internet Exchange Points (IXPs), which can improve performance and reduce latency and costs.

Evaluating land and connectivity at this level of detail through traditional one-off consulting studies or point solutions is slow, costly, and error-prone. In today's market, that means competitors secure the best sites first.

The real race isn't to find a single perfect site; it's to strategically accelerate your pipeline to find quality, high-potential sites fast and outpace the competition.

Instead of hoping to stumble upon one-off "golden nugget" sites, you need a way to systematically screen and target potential locations quickly and thoroughly. This demands a holistic, integrated approach to site selection, from interconnection and origination through detailed land analysis.
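One way to picture systematic screening is as a funnel of pass/fail filters applied in power-first order, followed by land and then connectivity. The sketch below is hypothetical: the site records, thresholds, and `screening_funnel` helper are illustrative assumptions, not any specific platform's method. Tracking how many candidates survive each stage shows where a pipeline is losing sites.

```python
def screening_funnel(sites, filters):
    """Apply (label, predicate) filters in order; record how many sites survive each stage."""
    remaining = list(sites)
    funnel = [("all candidates", len(remaining))]
    for label, keep in filters:
        remaining = [s for s in remaining if keep(s)]
        funnel.append((label, len(remaining)))
    return remaining, funnel


# Hypothetical candidate sites (all values made up)
sites = [
    {"name": "Site 1", "mw": 500, "upgrade_musd": 40, "acres": 250, "fiber_km": 2},
    {"name": "Site 2", "mw": 150, "upgrade_musd": 10, "acres": 400, "fiber_km": 1},
    {"name": "Site 3", "mw": 600, "upgrade_musd": 200, "acres": 300, "fiber_km": 3},
    {"name": "Site 4", "mw": 400, "upgrade_musd": 30, "acres": 80, "fiber_km": 1},
    {"name": "Site 5", "mw": 350, "upgrade_musd": 25, "acres": 150, "fiber_km": 12},
]

# Power first, then land, then connectivity (thresholds are assumptions)
filters = [
    ("power >= 300 MW", lambda s: s["mw"] >= 300),
    ("upgrade cost <= $50M", lambda s: s["upgrade_musd"] <= 50),
    ("developable >= 100 acres", lambda s: s["acres"] >= 100),
    ("fiber within 5 km", lambda s: s["fiber_km"] <= 5),
]

shortlist, funnel = screening_funnel(sites, filters)
for label, count in funnel:
    print(f"{label}: {count}")
print("Shortlist:", [s["name"] for s in shortlist])
```

Ordering the filters with power first means the cheapest-to-check, most decisive constraint removes dead-end sites before any effort goes into detailed land or fiber analysis.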

For an even deeper dive into these strategies, watch our exclusive on-demand webinar: "Data center pipeline acceleration: scaling up site selection for the AI boom."

Accelerate your data center pipeline

Ready to streamline your site selection process and scale your data center pipeline? Discover how our platform can help you find and de-risk your next project.